Research

We are living in the era of big data: more than ever, we are surrounded by applications of data-driven methods such as large language models, recommender systems, and facial recognition. A common feature of these widespread applications is that the data-driven decision-making agents live in a digital world, in the sense that they have no direct physical interaction with our environment. As human beings, we are less sensitive to the mistakes they may make in these scenarios. However, in many other settings the data-driven decision-making agents must interact directly with the physical world, where mistakes can hardly be tolerated and may cause irreversible damage. For instance, in power grids, a single failure in one part may trigger a chain reaction and ultimately cause the whole grid to collapse (see, e.g., the 2025 Iberian Peninsula blackout). When applying data-driven methods to make decisions for physical systems, we often care more about whether the system operates in a stable and safe manner than about how optimal the decisions are. Deep learning for autonomous driving is a good example: the top priority is to keep the vehicle inside the safe region and avoid collisions with the environment and other vehicles. Another example is reinforcement learning for power system control, where the primary requirement is to ensure safe operation (e.g., keeping frequency and voltage within safe limits) during both policy training and implementation.

While this issue is well recognized in the contemporary machine learning community, most efforts are devoted to carefully designing loss functions that incorporate physical priors and then learning policies that tend to be reliable. However, little is known about where the reliability comes from. A fundamental question naturally arises:

- Make data-driven methods trustworthy by design. For most real-world physical systems, data from extreme or unsafe scenarios are rarely available, because collecting them could mean breaking down the whole system. Relying purely on training to learn how to handle such scenarios is therefore not trustworthy. By understanding the connection between policy properties and system behavior, we can design the policy structure (e.g., the neural network architecture) so that the policy itself inherits the properties that ensure the corresponding system behavior both during and after learning.

-

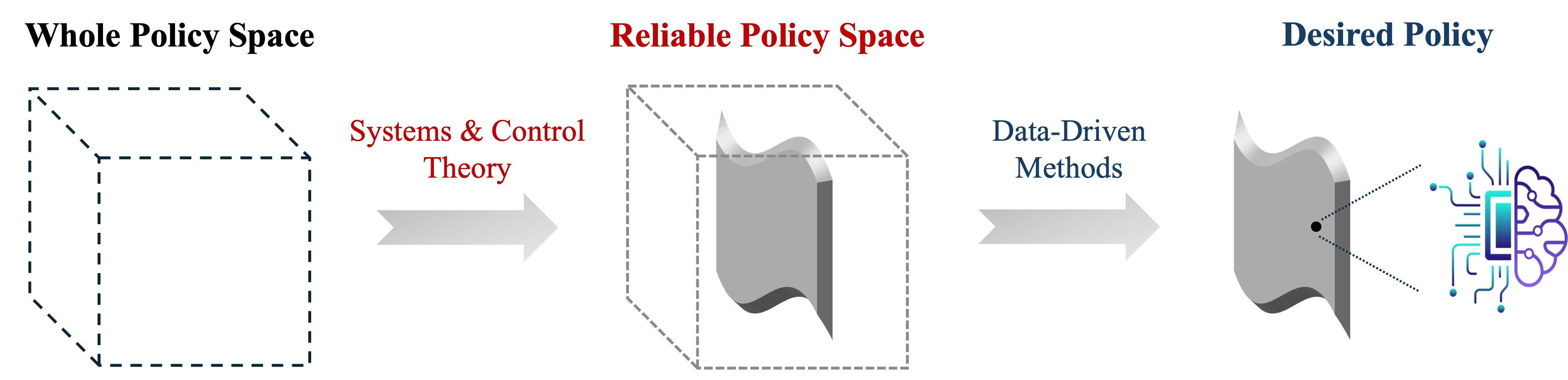

Improve efficiency of data-driven methods. Such understandings also enable us to identify a reduced policy space in which the policies are understood to lead desired system behavior as we want. Searching only within this reduced policy space helps to filter policies that may conflict with the physical nature and result in damages to the underlying system, thereby eliminating unnecessary trial-and-error and enabling more efficient policy exploration.
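As a rough illustration of both points, the sketch below parameterizes a scalar policy so that a structural property holds for every parameter value, not only after successful training. The specific property used here (a nonincreasing, slope-bounded map with zero output at the origin), the piecewise-linear parameterization, and all names (`make_policy`, `satisfies_structure`, `slope_max`, `x_lim`) are assumptions chosen for illustration; they are not taken from the text above, and which property is actually needed depends on the system at hand.

```python
import math

def make_policy(theta, slope_max=2.0, x_lim=1.0):
    """Build a scalar policy u = pi(x) from unconstrained parameters theta.

    Hypothetical structure: piecewise linear on [-x_lim, x_lim], saturated
    outside. Each segment slope is squashed into (-slope_max, 0), so pi is
    nonincreasing, slope-bounded, and satisfies pi(0) = 0 for ANY theta.
    """
    k = len(theta)
    width = 2.0 * x_lim / k
    grid = [-x_lim + i * width for i in range(k + 1)]
    # Sigmoid squashing: the constraint is built in, not learned.
    slopes = [-slope_max / (1.0 + math.exp(-t)) for t in theta]
    values = [0.0]
    for s in slopes:
        values.append(values[-1] + s * width)

    def interp(x):
        x = min(max(x, grid[0]), grid[-1])        # saturate outside the grid
        i = min(int((x - grid[0]) / width), k - 1)  # segment containing x
        return values[i] + slopes[i] * (x - grid[i])

    offset = interp(0.0)                           # shift so that pi(0) = 0
    return lambda x: interp(x) - offset

def satisfies_structure(policy, slope_max=2.0, x_lim=1.0, n=2000, tol=1e-9):
    """Numerically check the assumed structure on a sample grid."""
    xs = [-2.0 * x_lim + 4.0 * x_lim * i / n for i in range(n + 1)]
    us = [policy(x) for x in xs]
    rates = [(us[i + 1] - us[i]) / (xs[i + 1] - xs[i]) for i in range(n)]
    in_bounds = all(-slope_max - tol <= r <= tol for r in rates)
    return policy(0.0) == 0.0 and in_bounds
```

Any optimizer can then search over `theta` freely: every candidate it visits already lies in the reduced policy space, so no training step can produce a policy that violates the assumed structure.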

As a researcher in systems & control, my primary goal is to bridge this gap by connecting systems & control theory with data-driven methods. Systems & control theory provides classical and powerful tools to characterize the relationship between policy properties and system performance, such as stability, safety, and robustness. Combined with emerging data-driven techniques, these classical concepts offer exciting perspectives for rethinking policy design.

Over the past five years, I have aimed to answer the question raised above using different tools from systems & control theory, ranging from behavioral systems theory to Lyapunov stability, safety-critical control, and robust control theory. I have then applied these insights in various data-driven policy learning setups, e.g., direct data-driven learning and machine learning, to design efficient and reliable decision-making mechanisms. The accompanying figure illustrates the core idea of my PhD thesis. My research falls into two categories, corresponding to two different perspectives on identifying the reliable policy space. The first concerns direct data-driven learning, where the reliable policy space is identified using behavioral systems methods, with the aim of a unified and general framework for complex nonlinear systems. The second concerns machine learning, where the identification is performed using state-space control-theoretic methods and the designs are tailored to specific system models. In this category, I focus on power system applications.

Z. Yuan, “Data-Driven Learning and Control: Formal Guarantees and Applications to Power Networks,” Ph.D. thesis, University of California, San Diego, 2025. [Link]

From behavioral systems theory to direct data-driven learning for complex systems

Z. Xiong, Z. Yuan, K. Miao, H. Wang, J. Cortés, and A. Papachristodoulou, “Direct Data-driven control for nonlinear systems via Koopman bilinear realization,” 2025. Working Paper.

Z. Xiong, Z. Yuan, K. Miao, H. Wang, J. Cortés, and A. Papachristodoulou, “Data-enabled predictive control for nonlinear systems based on a Koopman bilinear realization,” in IEEE Conf. on Decision and Control, Rio de Janeiro, Brazil, Dec. 2025. [arXiv]

Z. Yuan and J. Cortés, “Data-driven optimal control of bilinear systems,” IEEE Control Systems Letters, 6: 2479-2484, 2022. [IEEE Xplore, arXiv]

From state-space control theory to machine learning for power systems

Frequency control

Z. Sun*, Z. Yuan*, C. Zhao, and J. Cortés, “Learning decentralized frequency controllers for energy storage systems,” IEEE Control Systems Letters, 7: 3459-3464, 2023. [IEEE Xplore] (Joint w/ ACC'24)

Z. Yuan, C. Zhao, and J. Cortés, “Reinforcement learning for distributed transient frequency control with stability and safety guarantees,” Systems & Control Letters, 185: 105753, 2024. [ScienceDirect, arXiv]

Voltage control

Z. Yuan, J. Feng, Y. Shi, and J. Cortés, “Stability constrained voltage control in distribution grids with arbitrary communication infrastructure,” IEEE Transactions on Smart Grid, 2025. To appear. [IEEE Xplore, arXiv]

Z. Yuan, G. Cavraro, and J. Cortés, “Learning stable Volt/Var controllers in distribution grids,” Big Data Application in Power Systems (Second Edition), ed. R. Arghandeh and Y. Zhou, Elsevier Science, Netherlands, 2024. [ScienceDirect]

Z. Yuan, G. Cavraro, A. S. Zamzam, and J. Cortés, “Unsupervised learning for equitable DER control,” Electric Power Systems Research, 234: 110634, 2024. [ScienceDirect, arXiv] (Joint w/ PSCC'24)

Z. Yuan, G. Cavraro, and J. Cortés, “Constraints on OPF surrogates for learning stable local Volt/Var controllers,” IEEE Control Systems Letters, 7: 2533-2538, 2023. [IEEE Xplore, arXiv] (Joint w/ CDC'23)

Z. Yuan, G. Cavraro, M. K. Singh, and J. Cortés, “Learning provably stable local Volt/Var controllers for efficient network operation,” IEEE Transactions on Power Systems, 39(1): 2066-2079, 2024. [IEEE Xplore, arXiv, CDC'22]